Governed automations that made delivery faster—and safer

Built an AI-assisted workflow layer that connected planning, code, QA, and documentation into a single repeatable delivery system. The goal: reduce manual overhead, keep artifacts current, and make every change “reviewable + auditable” across teams.

Tools connected (representative)

- Manual status chasing and repeated “where is this at?” questions.

- Docs/diagrams quickly becoming stale after releases.

- Inconsistent acceptance criteria and QA readiness.

- Fragmented observability signals during rollouts.

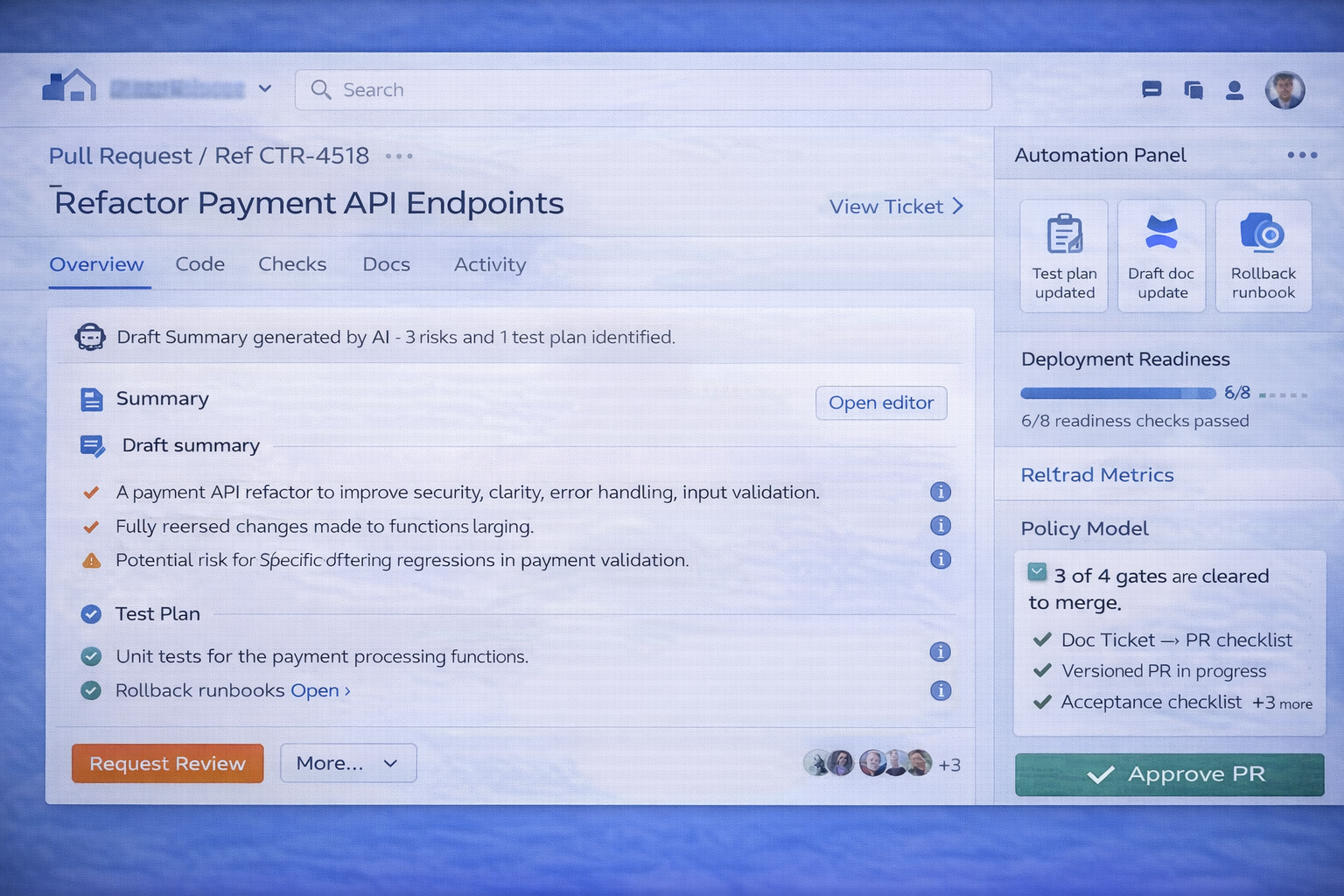

- Drafting assistants: PR summaries, test notes, release notes.

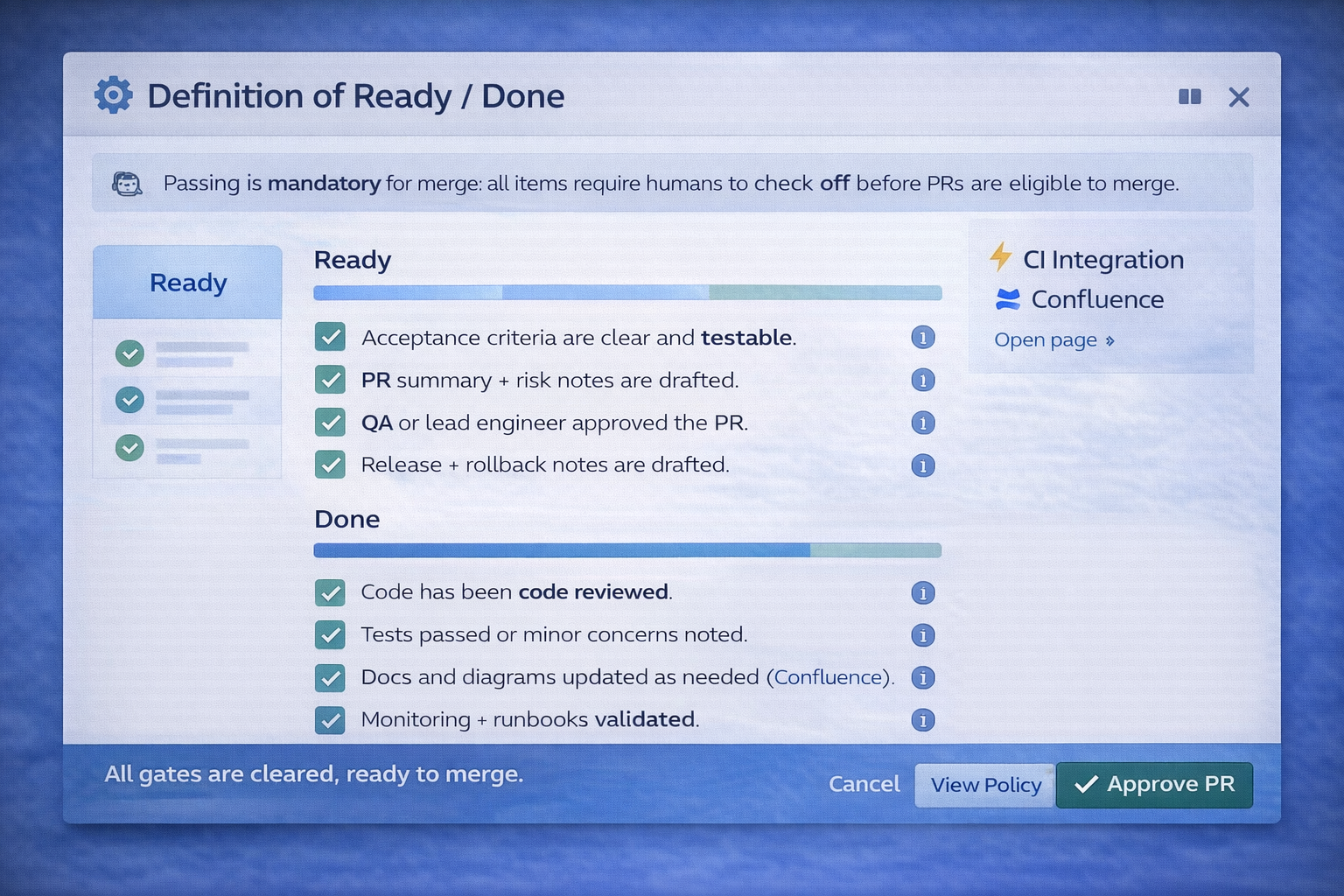

- “Definition of Ready/Done” enforcement with checklists.

- Doc/diagram updates proposed as PRs (reviewable changes).

- Deployment and incident hooks to keep context together.

Automation catalog

| Automation | What it does | Guardrail |
|---|---|---|
| Ticket → AC draft | Drafts acceptance criteria and QA scenarios from ticket context. | Requires PM/QA approval before “Ready” state. |
| PR → Release notes | Generates release notes with user impact + risk + rollback notes. | Release note PR must be approved by owner. |
| PR → Doc update PR | Suggests Confluence/doc changes as a versioned PR. | Docs only updated via reviewable changes. |
| Deploy → Observability bundle | Links dashboards, alerts, and runbooks for a release. | Standard checklist required for production deploy. |
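
To make the "PR → Release notes" row concrete, here is a minimal Python sketch. All names (`PullRequest`, `ReleaseNoteDraft`, `draft_release_notes`) and the field layout are assumptions for illustration, not the system's actual implementation. It shows the shape of the guardrail: the automation produces a draft, and the draft is not publishable until the owner approves it.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PullRequest:
    # Hypothetical, simplified view of a merged PR.
    number: int
    title: str
    user_impact: str
    risk: str
    rollback: str


@dataclass
class ReleaseNoteDraft:
    body: str
    owner: str
    approved_by: Optional[str] = None  # stays None until the owner approves

    def approve(self, reviewer: str) -> None:
        # Guardrail from the catalog: only the owner may approve.
        if reviewer != self.owner:
            raise PermissionError(f"{reviewer} is not the owner ({self.owner})")
        self.approved_by = reviewer

    @property
    def publishable(self) -> bool:
        return self.approved_by is not None


def draft_release_notes(prs: List[PullRequest], owner: str) -> ReleaseNoteDraft:
    """Render a draft with user impact, risk, and rollback notes per PR."""
    lines = []
    for pr in prs:
        lines.append(f"#{pr.number} {pr.title}")
        lines.append(f"  Impact: {pr.user_impact}")
        lines.append(f"  Risk: {pr.risk}")
        lines.append(f"  Rollback: {pr.rollback}")
    return ReleaseNoteDraft(body="\n".join(lines), owner=owner)
```

Keeping approval as explicit state on the draft, rather than a side effect of generation, is what lets the same pattern apply to the other rows in the catalog.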

System map

- Governed Automation Layer: drafting + validation + approvals

- Intake + Planning: Ready/Done enforcement

- Code + Delivery: PR-driven artifacts

- Observability + Readiness: dashboards and runbooks in context

Workflow (end-to-end)

Automation drafts acceptance criteria, QA scenarios, and release notes skeleton using approved templates.

PR summary, risks, and test plan are drafted and must be confirmed by the developer + QA.

Lint/tests/quality gates run; missing artifacts block merging (unless explicitly waived with reason).

Release notes finalized; dashboards and runbooks linked; post-deploy monitoring checklist executed.

Post-release notes capture incidents, regressions, and follow-ups so the system improves over time.
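
The merge-gate step in the workflow above (missing artifacts block merging unless explicitly waived with a reason) can be sketched as a small check. This is an illustrative sketch only: the artifact names in `REQUIRED_ARTIFACTS`, the `Waiver` type, and the `merge_blockers` function are hypothetical, and the reviewer field on the waiver is an assumption borrowed from the policy-waiver rule in the governance model.

```python
from dataclasses import dataclass
from typing import List, Set

# Hypothetical artifact names; the real required set is not given in the text.
REQUIRED_ARTIFACTS = {"pr_summary", "test_plan", "release_notes"}


@dataclass(frozen=True)
class Waiver:
    artifact: str
    reason: str
    reviewer: str


def merge_blockers(present: Set[str], checks_passed: bool,
                   waivers: List[Waiver]) -> List[str]:
    """Return the reasons a merge is blocked; an empty list means it may proceed."""
    blockers = []
    if not checks_passed:
        blockers.append("lint/tests/quality gates failed")
    # A waiver only counts if it carries a non-empty reason and reviewer.
    waived = {w.artifact for w in waivers
              if w.reason.strip() and w.reviewer.strip()}
    for artifact in sorted(REQUIRED_ARTIFACTS - present - waived):
        blockers.append(f"missing artifact: {artifact}")
    return blockers
```

Returning a list of reasons, rather than a bare boolean, means the gate can tell the developer exactly what is missing. Note that in this sketch a waiver covers a missing artifact but never a failed check.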

Governance & safety model

| Control | Rule | Why it matters |
|---|---|---|
| Approval gates | Automation can draft; humans approve. | Prevents silent changes and “automation drift.” |
| Audit trail | Every action links to a ticket/PR and an owner. | Enables accountability and compliance posture. |
| Policy waivers | Any bypass requires a reason + reviewer sign-off. | Balances speed with transparency. |
| Secrets & scopes | Least-privilege tokens and scoped access. | Reduces security risk across integrations. |
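
One way the approval-gate and audit-trail rules could fit together is a small state machine in which every transition is logged against the ticket or PR it belongs to. The sketch below is an assumption-laden illustration: `ProposedChange`, `AuditEvent`, `draft_change`, and the `bot:` actor prefix used to tell automation from humans are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List


@dataclass
class AuditEvent:
    action: str
    actor: str
    ref: str        # ticket or PR the action links to
    at: datetime


@dataclass
class ProposedChange:
    ref: str                 # e.g. a ticket key or PR URL (hypothetical format)
    owner: str
    content: str
    state: str = "draft"     # draft -> approved -> applied
    trail: List[AuditEvent] = field(default_factory=list)

    def _log(self, action: str, actor: str) -> None:
        now = datetime.now(timezone.utc)
        self.trail.append(AuditEvent(action, actor, self.ref, now))

    def approve(self, reviewer: str) -> None:
        if self.state != "draft":
            raise ValueError(f"cannot approve from state {self.state!r}")
        # Assumed convention: automation accounts are prefixed with "bot:".
        if reviewer.startswith("bot:"):
            raise PermissionError("automation can draft, but only humans approve")
        self.state = "approved"
        self._log("approve", reviewer)

    def apply(self, actor: str) -> None:
        # No silent edits: applying is only possible after a recorded approval.
        if self.state != "approved":
            raise ValueError("change must be approved before it is applied")
        self.state = "applied"
        self._log("apply", actor)


def draft_change(ref: str, owner: str, content: str,
                 bot: str = "bot:drafter") -> ProposedChange:
    """Create a draft on behalf of an automation and record who drafted it."""
    change = ProposedChange(ref=ref, owner=owner, content=content)
    change._log("draft", bot)
    return change
```

Because every event carries the `ref`, the trail for any applied change can be traced back to its ticket or PR and to the person who approved it.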

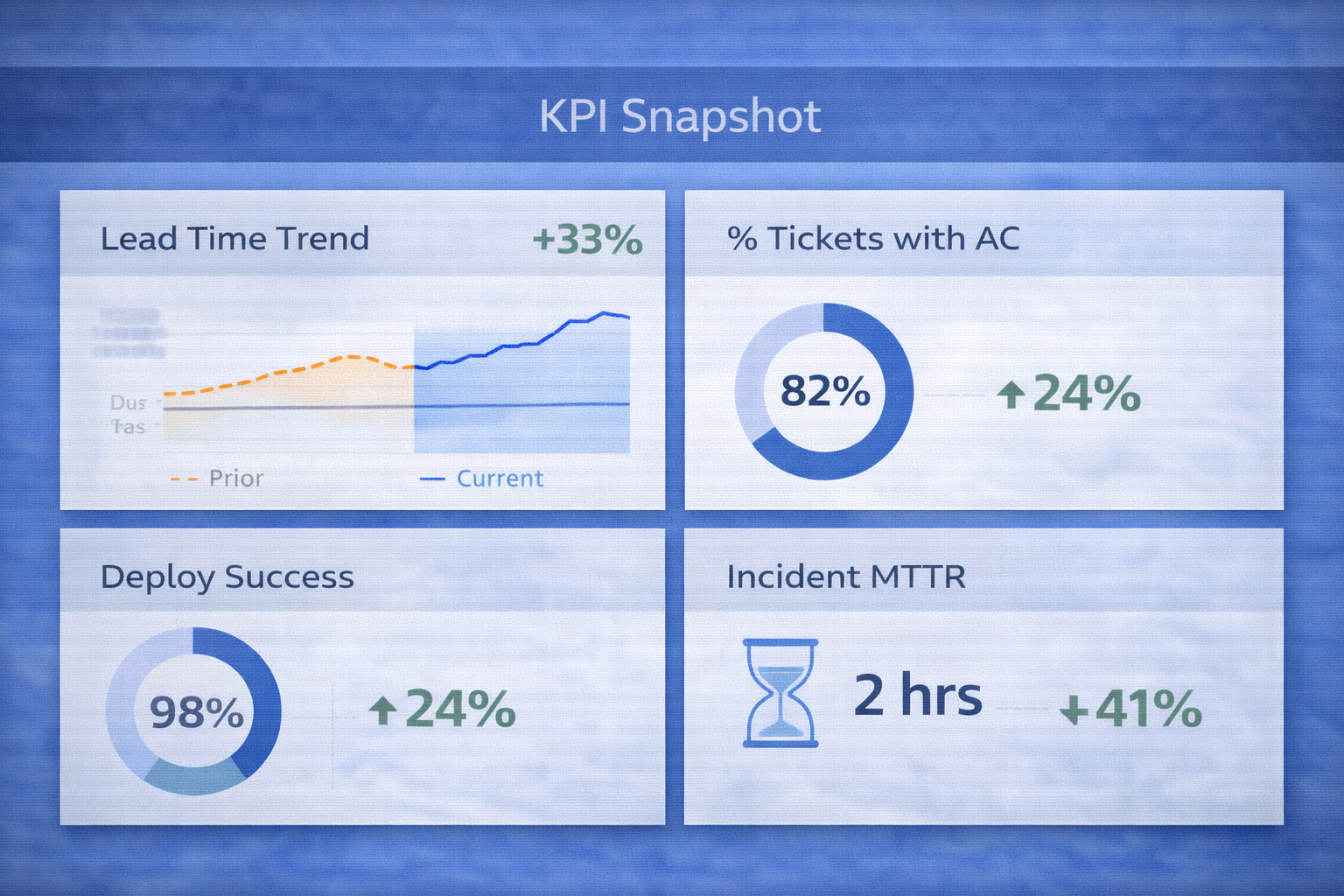

Impact (replace with your metrics)

| Outcome | What improved |
|---|---|
| Less manual overhead | Standard artifacts drafted automatically; less time spent rewriting context. |
| Higher QA readiness | Fewer missing acceptance criteria and test notes at merge time. |
| Docs don’t rot | Doc/diagram updates shipped as part of the same PR workflow. |
| Better release confidence | Deploy checklists + observability bundles reduced “unknowns.” |

My leadership

- Turned “AI ideas” into a governed system engineers trusted.

- Designed policy gates and audit trails to prevent risky automation.

- Aligned PM/QA/Eng on readiness standards and artifact ownership.

- Kept the system pragmatic: measurable wins, incremental adoption.

- Draft → approve → apply workflow (no silent edits)

- Idempotent integration patterns + scoped access

- PR-driven documentation to keep artifacts current

- Release + observability bundled as one deliverable
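
As a rough illustration of the idempotent integration pattern listed above, the Python sketch below derives a deterministic key for each proposed doc update so that a replayed event (for example, a webhook delivered twice) reuses the existing doc PR instead of opening a duplicate. The names `idempotency_key` and `DocPrProposer`, and the in-memory store, are hypothetical stand-ins; a real integration would persist the keys and call the code host's API.

```python
import hashlib
from typing import Dict


def idempotency_key(source_pr: str, doc_path: str, proposed_text: str) -> str:
    """Same inputs always give the same key, so retries are detectable."""
    digest = hashlib.sha256()
    for part in (source_pr, doc_path, proposed_text):
        digest.update(part.encode("utf-8"))
        digest.update(b"\x00")  # separator so ("ab", "c") != ("a", "bc")
    return digest.hexdigest()


class DocPrProposer:
    """In-memory stand-in for a service that opens doc-update PRs."""

    def __init__(self) -> None:
        self._opened: Dict[str, int] = {}   # idempotency key -> doc PR id
        self._next_id = 1

    def propose(self, source_pr: str, doc_path: str, proposed_text: str) -> int:
        key = idempotency_key(source_pr, doc_path, proposed_text)
        if key in self._opened:
            return self._opened[key]        # replayed event: reuse existing PR
        pr_id = self._next_id
        self._next_id += 1
        self._opened[key] = pr_id
        return pr_id
```

Including the proposed text in the key means a genuinely new suggestion for the same document still produces a new reviewable PR, while exact retries collapse into one.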